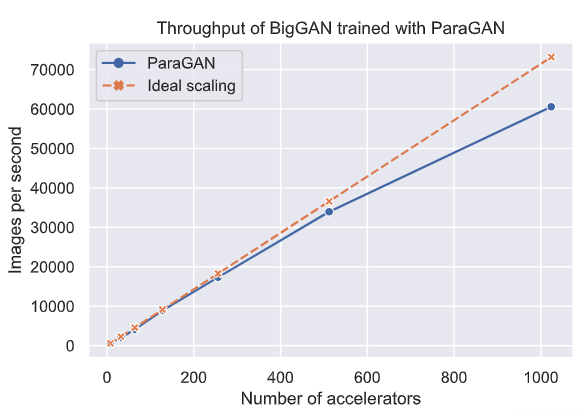

ParaGAN scales to 1024 TPU cores at 91% scaling efficiency.

Abstract

We present ParaGAN, a cloud training framework for GANs that is optimized from both system and numerical perspectives. On the system side, ParaGAN implements a congestion-aware pipeline for latency hiding, a hardware-aware layout transformation for improved accelerator utilization, and an asynchronous update scheme to improve system performance. On the numerical side, we introduce an asymmetric optimization policy to stabilize training. Our preliminary experiments show that ParaGAN reduces the training time of BigGAN from 15 days to 14 hours on 1024 TPU cores, achieving 91% scaling efficiency. We also demonstrate that ParaGAN enables BigGAN to generate images at an unprecedented resolution of 1024×1024.

Publication

In ISCA’23 ML for Computer Architecture and Systems (MLArchSys) Workshop