Biography

I am a fifth-year Ph.D. student at the National University of Singapore, jointly advised by Prof. Jialin Li and Wei Lin. My primary research interest is in developing highly efficient distributed systems for machine learning. I have previously interned at Google XLA, the Alibaba Platform for AI (PAI) team, and Apple Turi.

During my undergraduate studies, I had the privilege (and fun) of spending four years with the NTU HPC club, where we won the Overall Championship at SC'17 and set the LINPACK World Record. I’ve also hosted an MLSys seminar.

Outside of my academic pursuits, I enjoy cooking (menu), jogging, and ham radio. My Erdős number is 5.

Download my resumé.

- Large-Scale Machine Learning

- Automatic Distributed Training

- Distributed Systems

Ph.D. Candidate, Computer Science, 2021 - Current

National University of Singapore

Bachelor of Engineering in Computer Science, 2015 - 2019

Nanyang Technological University

Visiting Student, Fall 2016

New York University

Publications

We present a predictive KV cache offloading mechanism that supports ultra-long decoding phases in reasoning and agentic workloads.

We present a framework that derives the tensor parallel schedule for large neural networks 160x faster.

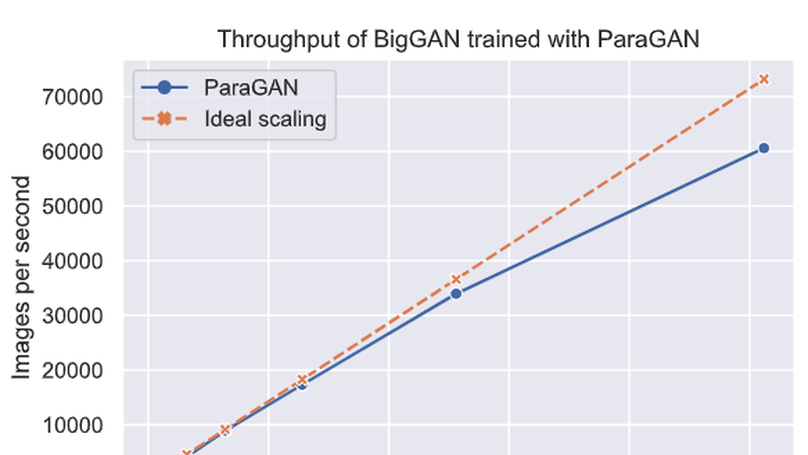

We present ParaGAN, a cloud-training framework for GANs that demonstrates near-optimal scaling performance over thousands of accelerators through system and training co-design.

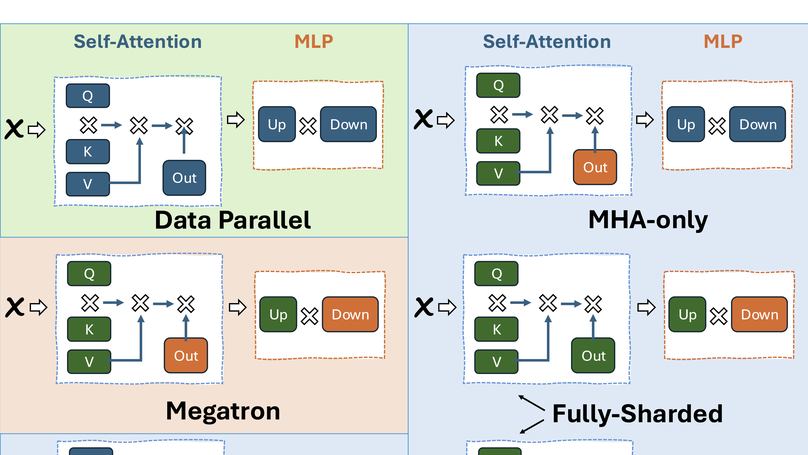

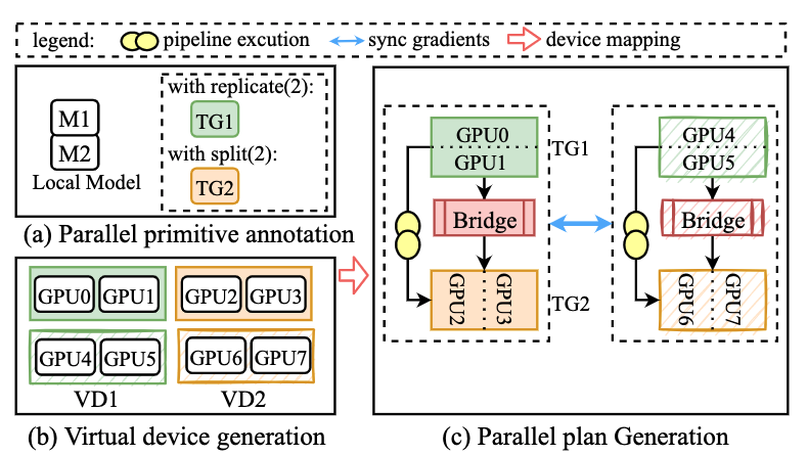

Whale is a highly scalable and efficient distributed training framework for deep neural networks. It introduces a hardware-aware parallel strategy and user-enabled model annotations for optimising large-scale model training, and has been used to train a multimodal model with over ten trillion parameters on a 512-GPU setup.

We propose an efficient parameter-sharing strategy for the Transformer architecture that replaces the FFN with an MoE layer and shares the trainable parameters except for the normalization layers. Competitive performance across CV and NLP tasks was achieved with up to a 6x reduction in the number of unique parameters.